This article is an excerpt from the Shortform book guide to "Rebooting AI" by Gary Marcus and Ernest Davis. Shortform has the world's best summaries and analyses of books you should be reading.


What are the limitations of artificial intelligence? Why does AI sometimes make mistakes that seem obvious to humans?

In their book Rebooting AI, Gary Marcus and Ernest Davis explore the challenges facing AI systems. They discuss three key machine learning limitations: issues with training data, language processing problems, and difficulties in perceiving the physical world.

Read on to discover why AI isn’t as smart as you might think and what needs to change for machines to truly understand our world.

Machine Learning Limitations

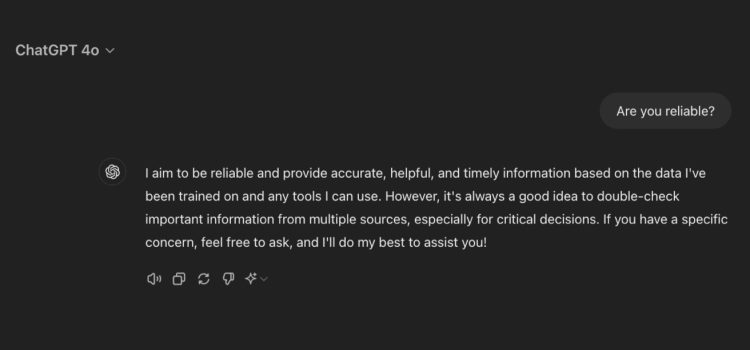

So far, AIs have been successful when they’ve been designed to do a single task. Their problem is reliability: even if they work most of the time, we never know when they’ll make nonsensical mistakes that no human ever would. Marcus and Davis blame AI’s problems on fundamental design deficiencies. They discuss three machine learning limitations: the nature of the data AIs are trained on, problems with how computers process language, and the way that machines perceive the physical world.

Limitation #1: Data

Davis and Marcus’s main objection to training neural networks using large amounts of data is that, when this strategy is employed to the exclusion of every other programming tool, it’s hard to correct for a system’s dependence on statistical correlation instead of logic and reason. Because of this, neural networks can’t be debugged in the way that human-written software can, and they’re easily fooled when presented with data that don’t match what they’re trained on.

AI Hallucinations

When neural networks are trained solely on input data rather than programmed by hand, it’s impossible to say exactly why the system produces a particular result from any given input. For example, suppose an airport computer trained to identify approaching aircraft mistakes a flight of geese for a Boeing 747. In AI development, this kind of mismatch error is referred to as a “hallucination,” and under the wrong circumstances, such as in an airport control tower, the disruptions an AI hallucination might cause range from costly to catastrophic.

Marcus and Davis write that, when AI hallucinations occur, it’s impossible to identify where the errors take place in the maze of computations inside a neural network. This makes traditional debugging impossible, so software engineers have to “retrain” that specific error out of the system, such as by giving the airport computer’s AI thousands of photos of birds in flight that are clearly labeled as “not airplanes.” Davis and Marcus argue that this solution does nothing to fix the systemic issues that cause hallucinations in the first place.

Hallucinations and Big Data

AI hallucinations aren’t hard to produce, as anyone who’s used ChatGPT can attest. In many cases, AIs hallucinate when presented with information in an unusual context that differs from what’s included in the system’s training data. Consider the popular YouTube video of a cat dressed as a shark riding a Roomba. However bizarre the image, a human has no difficulty identifying what they’re looking at, whereas an AI given the same task would offer a completely wrong answer. Davis and Marcus argue that this matters when pattern recognition is used in critical situations, such as in self-driving cars. If the AI scanning the road ahead sees an unusual object in its path, the system could hallucinate with disastrous results.

Hallucinations illustrate a difference between human and machine cognition—we can make decisions based on minimal information, whereas machine learning requires huge datasets to function. Marcus and Davis point out that, if AI is to interact with the real world’s infinite variables and possibilities, there isn’t a big enough dataset in existence to train an AI for every situation. Since AIs don’t understand what their data mean, only how those data correlate, AIs will perpetuate and amplify human biases that are buried in their input information. There’s a further danger that AI will magnify its own hallucinations as erroneous computer-generated information becomes part of the global set of data used to train future AI.

Limitation #2: Language

A great deal of AI research has focused on systems that can analyze and respond to human language. While the development of language interfaces has been a vast benefit to human society, Davis and Marcus insist that current machine learning language models leave much to be desired. They highlight how language systems based entirely on statistical correlations can fail at even the simplest tasks and why the ambiguity of natural speech is an insurmountable barrier for the current AI paradigm.

It’s easy to imagine that when we talk to Siri or Alexa, or phrase a search engine request as an actual question, the computer understands what we’re asking; but Marcus and Davis remind us that AIs have no idea that words stand for things in the real world. Instead, AIs merely compare what you say to a huge database of preexisting text to determine the most likely response. For simple questions, this tends to work, but if your phrasing doesn’t match the AI’s database, the odds that it will respond incorrectly increase. For instance, if you ask Google “How long has Elon Musk been alive?” it will tell you his date of birth but not his age, unless his age is spelled out online in something close to the way you asked.

A Deficiency of Meaning

Davis and Marcus say that, though progress has been made in teaching computers to differentiate parts of speech and basic sentence structure, AIs are unable to compute the meaning of a sentence from the meaning of its parts. As an example, ask a search engine to “find the nearest department store that isn’t Macy’s.” What you’re likely to get is a list of all the Macy’s department stores in your area, clearly showing that the search engine doesn’t understand how the word “isn’t” relates to the rest of the sentence.
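
To make the Macy’s example concrete, here is a toy sketch in Python. It is a hypothetical ranking function written for illustration, not how Google or any real search engine works: it scores each listing by counting the words it shares with the query, with no notion of how “isn’t” changes the meaning of the sentence. Because “Macy’s” appears in the query, the Macy’s listing scores highest.

```python
import re

# Hypothetical toy ranker for illustration only (not any real search engine):
# it scores documents by shared words and ignores how words like "isn't"
# change the meaning of the query.

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of word tokens it contains."""
    return set(re.findall(r"[a-z']+", text.lower()))

def rank_by_overlap(query: str, documents: list[str]) -> list[str]:
    """Sort documents by how many distinct words they share with the query."""
    query_words = tokenize(query)
    # sorted() is stable, so documents with equal scores keep their input order
    return sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )

stores = [
    "Macy's department store, downtown",
    "Nordstrom department store, uptown",
    "Corner hardware shop",
]

results = rank_by_overlap("find the nearest department store that isn't Macy's", stores)
print(results[0])  # → Macy's department store, downtown
```

A system that grasped the sentence would treat “isn’t Macy’s” as a constraint and exclude that listing; counting shared words can only reward it for mentioning Macy’s.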

An even greater difficulty arises from the inherent ambiguity of natural language. Many words have multiple meanings, and sentences take many grammatical forms. However, Marcus and Davis show that AI is most perplexed by the unspoken assumptions behind every human question or statement. Given their limitations, no modern AI can read between the lines. Every human conversation rests on a shared understanding of the world that both parties take for granted, such as the patterns of everyday life or the basic laws of physics that constrain how we behave. Since AI language models are limited to words alone, they can’t understand the larger reality that words reflect.

Limitation #3: Awareness

While language-processing AI ignores the wider world, mapping and modeling objects in space is a primary concern in robotics. Since robots and the AIs that run them operate in the physical realm, beyond the safety of mere circuit pathways, they must observe and adapt to their physical surroundings. Davis and Marcus describe how both traditional programming and machine learning are inadequate for this task, and they explore why future AIs will need a broader set of capabilities than we currently have in development if they’re to safely navigate the real world.

Robots are hardly anything new—we’ve had robotic machinery in factories for decades, not to mention the robotic space probes we’ve sent to other planets. However, all our robots are limited in function and the range of environments in which they operate. Before neural networks, these machines were either directly controlled or programmed by humans to behave in certain ways under specific conditions to carry out their tasks and avoid a limited number of foreseeable obstacles.

Real-World Complexity

With machine learning’s growing aptitude for pattern recognition, neural network AIs operating in the real world are getting better at classifying the objects that they see. However, statistics-based machine learning systems still can’t grasp how real-world objects relate to each other, nor can AIs fathom how their environment can change from moment to moment. Researchers have made progress on the latter issue using video game simulations, but Marcus and Davis argue that an AI-driven robot in the real world could never have time to simulate every possible course of action. If someone steps in front of your self-driving car, you don’t want the car to waste too much time simulating the best way to avoid them.

Davis and Marcus believe that an AI capable of safely interacting with the physical world must have a human-level understanding of how the world actually works. It must have a solid awareness of its surroundings, the ability to plan a course of action on its own, and the mental flexibility to adjust its behavior on the fly as things change. In short, any AI whose actions and decisions are going to have real-world consequences beyond the limited scope of one task needs to be strong AI, not the narrow AIs we’re developing now.

Exercise: Reflect on the Impact of AI in Your Life

AI is becoming omnipresent in our lives, from smartphone tools, search engines, and automated product recommendations to the chatbots that now help write news stories. However, Marcus and Davis argue that AI could be doing much more and that we overestimate what AI does because we don’t really understand its inner workings. Think about how you use AI at present and how that might shift in the future.

- What AI application do you use most often, such as a search engine or the map app on your phone? Do you feel that it gives the best results it can? In what ways have its results frustrated you?

- What experience have you had with AI errors and hallucinations? Given Marcus and Davis’s description of AI’s faults, what would you guess caused any errors you experienced?

- What’s one thing that you wish AI could do? If AI could develop that skill by learning to think more like a human being, do you feel that would be a good thing for society? Why or why not?
