This is a free excerpt from one of Shortform’s Articles.

What is artificial general intelligence? Is AGI possible? Does it pose a threat to humans?

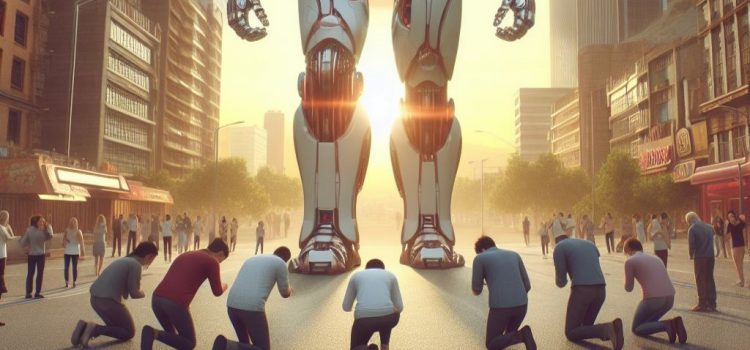

Artificial general intelligence (AGI) is a machine intelligence that understands the world as well as humans do. Hopes and fears about artificial intelligence reaching superhuman levels of skill or giving us a society run by robots are all predicated on the idea that we can build a type of AI that’s still hypothetical.

Here’s a look at what AGI is and whether it could be dangerous.

Is Artificial General Intelligence Inevitable?

Leaders at OpenAI, Google, and Meta have all said they aim to create artificial general intelligence (AGI). This kind of artificial intelligence would have a much broader range of skills than our current AI models.

It’s hard to sort out fact from fiction when it comes to the potential of AGI, in no small part because there’s little consensus among experts. Some worry that AGI is just around the corner, while others wonder, “Is AGI possible?”

What Is Artificial General Intelligence?

Experts haven’t agreed on a technical definition for “artificial general intelligence,” but it’s broadly thought of as machine intelligence that understands the world as well as humans do. That might sound straightforward enough, but understanding is itself difficult to define.

Because AGI would need cognitive flexibility, creativity, and the ability to problem-solve, some researchers worry that machine intelligence could develop some form of autonomy that would let it sidestep human control or even work toward goals it sets for itself. But answers to even the most fundamental questions about what, exactly, AGI would entail and how we would know when we’ve reached it are more elusive than you might think.

Can AI Become Smarter Than Humans?

OpenAI, which created ChatGPT, defines AGI as “AI systems that are generally smarter than humans.” But what does it mean to become “smarter” than humans? Intelligence looks different in machines than it does in humans. Machines have better memories, gather information faster, and process it more quickly than humans do. But humans apply imagination and intuition to their problem-solving, which allows us to solve novel problems.

As AI grows more sophisticated, it’s likely to become better at tasks that seem to require human intelligence. But even optimistic predictions say it’s unlikely to replicate human intelligence fully.

Does AGI Pose a Threat to Humans?

There’s also no consensus on another big question: whether advanced AI poses a threat to society or humanity. One concern is that an AI model with broad cognitive skills and wide knowledge might evolve to define its own goals and values, which might conflict with ours.

Another worrying observation is that models often learn unanticipated lessons from the datasets they’re trained on, resulting in unexpected and potentially dangerous behavior. Yet another anxiety is that once AI advances enough in its ability to improve itself, it could become impossible for us to control it.

While some researchers focus on the potential for long-term harm, others have sounded the alarm about more immediate risks, including AI’s potential to cause job loss, perpetuate social biases and discrimination, worsen inequality, violate privacy, and spread misinformation.

Will AGI Become a Reality? If So, When?

Another contentious question is when AI could advance to something like AGI. Some researchers think AGI has already arrived with GPT-4, the model that underlies ChatGPT. They note that GPT-4 is skilled at tasks like writing, drawing, and coding, and that it’s already better than humans at things like taking standardized tests.

But other experts say this doesn’t mean GPT-4, in its current form, understands those tasks or reasons through them. They argue that AI only repeats ideas that already exist, rather than creating something new. In their view, even training a model on an enormous dataset doesn’t give it the creativity, empathy, or real-world experience to comprehend that knowledge.

Google DeepMind’s Shane Legg estimates a 50% chance of achieving AGI by 2028. Anthropic’s Dario Amodei predicts “human-level” AI could arrive in the next two to three years, and OpenAI’s Sam Altman thinks AGI is four or five years away. But these executives are outliers: In a fall 2023 survey, 2,778 AI researchers estimated a 50% chance that “high-level machine intelligence” will arrive by 2047 and a 10% chance that it will arrive by 2027.
