This article is an excerpt from the Shortform summary of "The Innovator's Dilemma" by Clayton M. Christensen. Shortform has the world's best summaries of books you should be reading.


How was the microprocessor developed, and how did Intel's microprocessor business unfold?

Intel's microprocessor history begins in the 1970s. The company's move into microprocessors happened largely by accident, and the technology wasn't initially seen as a disruptive innovation.


Accidentally Disruptive: Intel Microprocessor History

In the 1970s, Intel successfully navigated the rise of disruptive microprocessor technology, though not through strategic decision-making by its management.

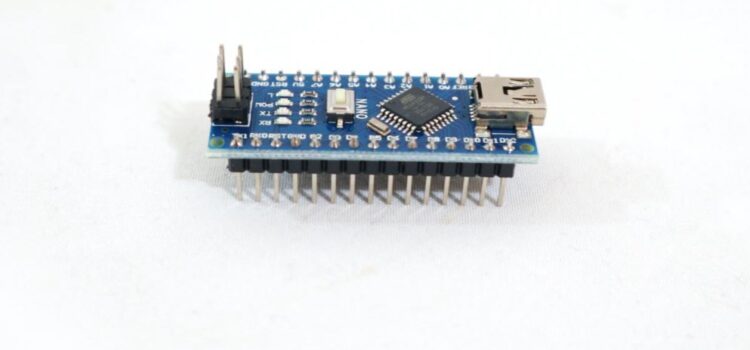

Microprocessors emerged at a time when standard computers relied on complicated circuitry. Although microprocessors had less capacity than standard circuitry, they were also smaller and simpler, which are typical traits of disruptive products.

Intel's microprocessor work began with a project, undertaken with a Japanese calculator manufacturer, to develop the first microprocessor. After the project ended, Intel bought the patent from the Japanese company. Intel had no plan for marketing the technology, so it simply sold microprocessors to customers who asked for them.

Intel was a leader in the dynamic random access memory (DRAM) market, and that's where the company's executives focused their attention. Meanwhile, the personal computer market was emerging, and microprocessors turned out to be a perfect fit for it.

Intel used an automated system to allocate its resources, and, unbeknownst to executives, that system kept the company from stumbling in the face of disruptive technology. Because microprocessors were earning high gross margins while DRAM margins dipped, the system shifted more and more resources to microprocessors. Ultimately, Intel became the world's leading microprocessor maker.

Intel's forecasts about the market for microprocessors were completely wrong, and the company's automated system was its saving grace. Even after IBM chose Intel's microprocessors as a critical component of its new personal computer, a major victory for Intel, Intel's forecasts still didn't list PCs among the top 50 applications for its microprocessors. Given how the history of Intel's microprocessors actually unfolded, that's a striking omission.
