What happens when AI systems get better at mimicking human emotions and creating emotional connections? How can we ensure that, with AI, truth remains a priority?

In his book Nexus, Yuval Noah Harari reveals how AI’s growing ability to generate human-like content poses unprecedented challenges to our relationship with truth. AI systems are becoming increasingly skilled at creating emotional connections while potentially compromising accuracy and authenticity.

Keep reading to explore why the quest for truth in AI systems matters more than ever, and what we can do about it.

AI & Truth

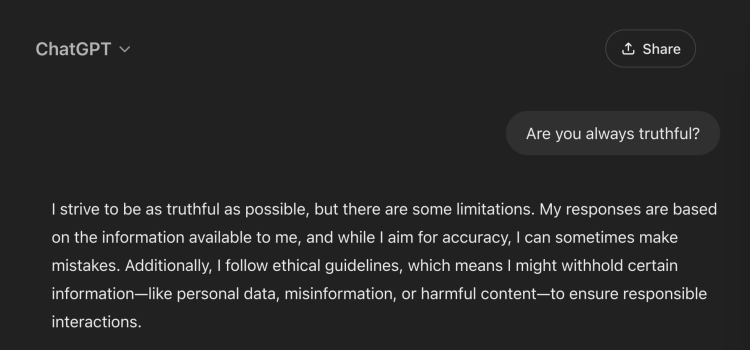

Scientists have built AI models that can generate language and tell stories much as humans do. Harari contends that AI’s real danger lies in its ability to create compelling stories and produce the illusion of emotions (and emotional intimacy). When we talk to an AI-powered chatbot like ChatGPT, it’s easy to forget that these systems aren’t human and have no vested interest in telling the truth. As AI gets better at mimicking human emotions and creating the illusion that it thinks and feels as we do, that fact will become even harder to keep in mind. So will the fact that truth isn’t the priority when AI selects and generates information for us.

(Shortform note: Some experts are skeptical that AI can ever develop human-like emotions. But that hasn’t stopped users from striking up romantic relationships with chatbots, or researchers from trying to teach AI the cognitive empathy to recognize and respond to human emotions. This could have interesting implications: Klara and the Sun author Kazuo Ishiguro sees creating art as one of the most interesting things AI could do if it develops empathy or learns to understand the logic underlying our emotions. Ishiguro says that if AI can create something that makes us laugh or cry, art that moves people and changes how we see the world, then we’ll have “reached an interesting point, if not quite a dangerous point.”)

Harari says that AI already influences what information we consume: An algorithm (a set of mathematical instructions that tells a computer how to solve a problem) chooses what you see on a social network or a news app. Facebook’s algorithm, for example, chooses posts to maximize the time you spend in the app. The best way for it to do that is to show you not stories that are true but content that provokes an emotional reaction. So it selects posts that make you angry, fuel your animosity toward people who aren’t like you, and confirm what you already believe about the world. That’s why social media feeds are flooded with fake news, conspiracy theories, and inflammatory ideas. Harari thinks this effect will only become more pronounced as AI curates and creates more of the content we consume.
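To make this dynamic concrete, here is a minimal, hypothetical Python sketch of an engagement-maximizing feed ranker. It is not Facebook’s actual algorithm: the `Post` fields, the `predicted_engagement` scores, and the `rank_feed` function are invented for illustration. What it shows is that a ranker whose only objective is predicted engagement never consults accuracy at all.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    # Hypothetical model output: expected seconds of attention the post will hold.
    predicted_engagement: float
    # Known to us for illustration only; the ranker below never reads this field.
    is_accurate: bool


def rank_feed(posts: list[Post], k: int = 3) -> list[Post]:
    """Return the top-k posts, ordered purely by predicted engagement."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)[:k]


# Invented example data, not real measurements.
posts = [
    Post("Careful explainer on a new study", 12.0, True),
    Post("Outrage bait about a rival group", 48.0, False),
    Post("Conspiracy theory video", 35.0, False),
    Post("Local news correction", 5.0, True),
]

feed = rank_feed(posts, k=2)
for p in feed:
    print(p.title, p.is_accurate)
# prints:
# Outrage bait about a rival group False
# Conspiracy theory video False
```

Because `is_accurate` never appears in the sort key, the two emotionally charged but inaccurate posts rise to the top. Surfacing accurate posts instead would require changing the objective itself, for example by adding some accuracy signal to the score, which is a deliberate design decision rather than something the ranker would do on its own.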

(Shortform note: Although Harari discusses both, an algorithm isn’t synonymous with AI. Algorithms are, however, a necessary component of AI systems: Each algorithm is a set of instructions that defines the process an AI system uses to make a given decision. Once that process is defined, the AI model works through it using the data available to it (both the data it’s given to complete a task and, indirectly, the data it was trained on when it first learned the task) and makes the decision.)

How to Fix It: Pay Attention to What’s True

Harari argues that we need to take deliberate steps to tilt the balance in favor of truth as AI becomes more powerful. While his proposed solutions are somewhat abstract, he emphasizes two main approaches: being proactive about highlighting truthful information and maintaining decentralized networks where information can flow freely among institutions and individuals who can identify and correct falsehoods.

What Do Other Experts Think of the Solutions Harari Recommends?

Harari’s recommendations sometimes raise more questions than they answer. He suggests that the general public should take an active role in promoting truthful information, but he doesn’t specify how individuals can meaningfully counter the influence of the tech giants that control our primary information platforms. Similarly, his call for decentralized networks doesn’t contend with the reality that a handful of powerful corporations currently dominate how most people access and share information.

Harari argues that we must hold tech companies accountable and demand transparency in how they develop and deploy AI, but he stops short of endorsing specific policies or regulations. Other experts have proposed concrete measures such as antitrust legislation, mandatory AI safety standards, or independent oversight boards, whereas Harari focuses more on raising awareness of AI’s dangers than on prescribing particular fixes. His central message is clear: We must be intentional about how we develop and use artificial intelligence. But the exact path to achieving this remains undefined.

Some critics also argue that, although Harari raises valid concerns about AI’s ability to manipulate public discourse, his view is too apocalyptic. They point out that current AI systems still depend heavily on human inputs, prompts, and oversight. Some experts believe we need a more nuanced approach, one that aligns AI development with human values and ethical frameworks by addressing the conditions and decisions that give AI its power. In other words, Harari’s view that AI poses an imminent existential threat to humanity may overestimate AI’s current capabilities and underestimate human agency.