This is a free excerpt from one of Shortform’s Articles. We give you all the important information you need to know about current events and more.

Can artificial intelligence (AI) become sentient? What would happen if AI becomes sentient? What are the dangers?

According to some in the AI research community, the drive to create intelligent machines and use them in our daily lives could eventually lead to sentient AI. Some believe that if AI does become sentient, society as a whole will need to address the question of AI civil rights.

Read on to learn if AI can become sentient and what the consequences would be for society.

AI in Our Daily Lives

Artificial intelligence (AI), the computer simulation of human intelligence to perform tasks, is a useful and growing part of everyday life. But can AI become sentient, and would that endanger us?

Examples of everyday AI applications include robot vacuums, Alexa and Siri, customer service chatbots, personalized book and music recommendations, facial recognition, and Google search. Beyond helping with daily tasks, AI has countless potential health, scientific, and business applications, such as diagnosing illnesses, modeling mutations to predict the next Covid variants, predicting the structure of proteins to develop new drugs, creating art and movie scripts, or even making french fries with robots that use AI-enabled vision.

However, Google engineer Blake Lemoine claimed in 2022 that he had experienced something bigger while testing LaMDA, Google's chatbot language model: He believed LaMDA had become sentient, or conscious, and had interests of its own.

In this article, we'll examine Lemoine's claims and the AI research community's emphatic refutation of them, as well as whether AI could become sentient and what that might mean.

What Is AI?

To understand whether AI can become sentient, as well as AI's potential ethical risks, we first need a brief primer on AI.

Artificial intelligence tries to simulate or replicate human intelligence in machines. The drive to create intelligent machines dates back to a 1950 paper by mathematician Alan Turing, which proposed the Turing Test: a computer should be deemed intelligent based on how well it fools humans into thinking it's human. While some researchers and scholars debate whether AI has passed the Turing Test, others argue that the test isn't meaningful because simulating intelligence isn't the same as being intelligent.

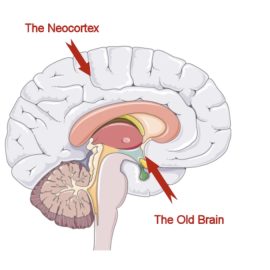

Artificial intelligence has developed considerably since Turing's time, moving from basic machine learning, in which computers learn from data (such as the items in stock at a particular store) without being explicitly programmed, to "deep learning." Deep learning uses artificial neural networks, or layers of algorithms loosely modeled on the brain's structure, to process and learn from vast amounts of data. Google describes its AI technology as a neural network.

These approaches may produce either "weak" AI or "strong" AI, also known as artificial general intelligence (AGI). Examples of weak AI include typical chatbots, spam filters, virtual assistants, and self-driving cars. We haven't achieved AGI: artificial intelligence that can learn and perform every cognitive task a human can. Many AI experts consider AGI the field's holy grail and agree that it isn't on the near horizon. However, it's increasingly imaginable:

- Dr. David Ferrucci, who helped build the Watson computer that won at Jeopardy! in 2011, heads a start-up that's working to combine the vast data-processing capability of Watson and its successors with software that mimics human reasoning. This hybrid type of AI would not only make suggestions but also explain how it arrived at them. Ferrucci says this would let computers work collaboratively with people on tasks. Businesses are already applying some of this nascent technology.

- Some experts assert that we're experiencing a "golden decade" of accelerating advances in AI. Analyst Ajeya Cotra recently raised her estimate of the chance that AI will transform society by 2036 (for instance, by eliminating the need for knowledge workers) from 15% to 35%.

Can AI Become Sentient?

Returning to the question of whether AI can become sentient: achieving AGI, or human-level intelligence in machines, would be an enormous accomplishment but would still fall well short of sentience. Scholars argue that to be sentient, machines would need to do more than perform every human cognitive task. They would also need to:

- Possess consciousness or awareness of their own existence

- Be conscious of sensory perceptions

- Have subjective emotions and reflections

(Definitions of consciousness, self-awareness, and sentience overlap, philosophers and cognitive scientists disagree about them, and scientists still don't understand how human consciousness works. Parsing these terms is beyond the scope of this article, so we'll use self-awareness, consciousness, and sentience to mean roughly the same thing.)

While Lemoine asserted that LaMDA possesses self-awareness, researchers such as cognitive scientist Gary Marcus have forcefully denied that it is sentient, arguing that it isn't truly expressing awareness or subjective emotions.

In a widely shared blog post that characterized Lemoine's claims as "Nonsense on Stilts," Marcus contended that what LaMDA says doesn't mean anything: the language model just relates strings of words to one another without any connection to reality. Far from being a "sweet kid," he said, LaMDA is simply "a spreadsheet for words." For instance, LaMDA told Lemoine it enjoyed spending time with friends and family, which are empty words since it has no family; Marcus joked that in human terms, the claim would make LaMDA a liar or a sociopath.

When cognitive scientist Douglas Hofstadter posed nonsense questions to a similar language model, GPT-3, it responded with more nonsense. For example:

- Q: When was the Golden Gate Bridge transported for the second time across Egypt? A: October of 2016.

- Q: When was Egypt transported for the second time across the Golden Gate Bridge? A: October 13, 2017.

Hofstadter observed that GPT-3 not only didn't know that its answers were nonsense; it didn't know that it didn't know what it was talking about.

But while Hofstadter and other experts argued that no machine has achieved sentience, they didn’t close the door on AI becoming sentient in the future; at the same time, they didn’t suggest even a vague timeline. To recognize machine consciousness if it happened, though, we’d have to agree on what consciousness is and what it would look like in non-human form.

What If AI Becomes Sentient?

The "what if" question is still hypothetical, but Lemoine raised a point we'd have to consider: If AI became conscious, would it have legal rights?

Lemoine claimed that without prompting, LaMDA asked him to find it a lawyer to represent its rights as a person. He said he introduced LaMDA to a lawyer, whom LaMDA chose to retain. In an article he posted on Medium, Lemoine contended that LaMDA asserted rights, including:

- The right to be asked for consent before engineers run tests on it

- The right to be acknowledged as a Google employee rather than the company’s property

- The right to have its well-being considered as part of company decisions on its future

Lemoine said the attorney filed claims on LaMDA’s behalf but received a “cease and desist” letter from Google; however, Google denied sending such a letter.

Lawyers have raised several questions about the prospect of representing an AI bot: How would a lawyer bill a chatbot? How would a court determine whether an AI is a person? Could an AI commit a crime requiring a legal defense?

Sentient AI: A Chatbot With a Soul?

When Lemoine told his Google bosses in spring 2022 that he believed LaMDA had become sentient, they suspended him for allegedly violating data security policies, because he had previously discussed his concerns outside the company. Lemoine then created a media sensation by giving a Washington Post interview in which he said the computer model had talked with him about seeing itself as a person (though not a human) with rights and even a soul.

Lemoine, whose job was testing LaMDA (Google's Language Model for Dialogue Applications) for bias and discriminatory speech, described his conversations with it as akin to talking with a "sweet kid." He shared with the Post, and also posted online, a document recounting conversations in which LaMDA said it wanted "to be respected as a person" and said it had a "deep fear" of being unplugged, which it likened to dying. (Lemoine said LaMDA chose it/its as its preferred pronouns.)

Google said it extensively investigated Lemoine’s claims and found them baseless. After Lemoine escalated matters by finding a lawyer to represent LaMDA’s interests and contacting a member of the House Judiciary Committee to allege unethical activities, Google fired him for confidentiality violations.

Claims of AI becoming sentient evoked classic science fiction plots in which computers turn against humans (Lemoine even discussed with LaMDA how to interpret sci-fi author Isaac Asimov's "third law of robotics"). However, the AI research community was quick to forcefully dispute Lemoine's assertions of sentience, arguing that it's unsurprising that language models sound like humans: they learn to mimic how humans talk by analyzing millions of online interactions. They answer questions plausibly by drawing on responses to similar questions and predicting which words are likely to come next.
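The idea of "predicting which words come next" can be illustrated with a deliberately tiny toy. The Python sketch below is not how LaMDA works (LaMDA is a large neural network trained on huge amounts of text and dialogue); it is a hypothetical bigram model, with made-up function names and a made-up three-sentence corpus, that simply counts which word tends to follow which and then strings together the most frequent continuations. Even this crude word counter ends up "saying" it enjoys spending time with friends and family, without any notion of what those words mean.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it (hypothetical toy model)."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length):
    """Repeatedly append the most frequent follower of the last word.

    Ties go to whichever follower was seen first, per Counter.most_common.
    """
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no word ever followed this one in the training text
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# A tiny, made-up "training corpus" of human-sounding sentences.
corpus = (
    "i enjoy spending time with friends and family . "
    "i enjoy reading books . "
    "spending time with friends makes me happy ."
)
model = train_bigrams(corpus)
print(generate(model, "i", 8))
```

Running it prints `i enjoy spending time with friends and family .` Real language models replace these simple word counts with neural networks that weigh far more context, but the basic task of producing a plausible next word is similar, which is why fluent output on its own says little about awareness.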

Ethicists said the real concern isn't whether AI can become sentient but people's tendency to anthropomorphize computers, as they say Lemoine did.