This article is an excerpt from the Shortform book guide to "Being Wrong" by Kathryn Schulz.

Do you laugh at your mistakes? Even more important, do you leverage your mistakes?

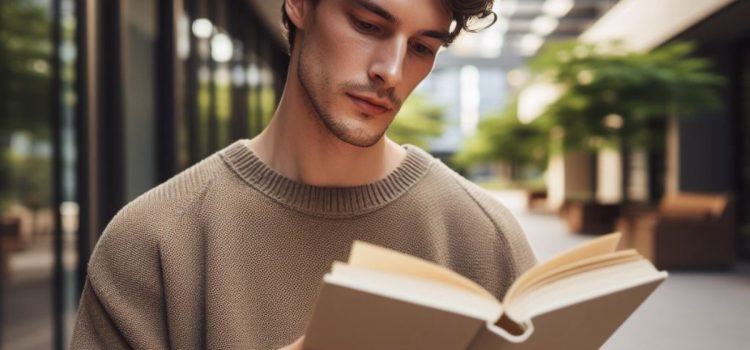

Kathryn Schulz’s book Being Wrong encourages readers to view errors as opportunities for growth rather than sources of shame. The book examines how perceptions of reality become distorted and offers strategies for gracefully accepting your fallibility.

Continue reading for an overview of this book that will help you enjoy, as Schulz puts it, “adventures in the margin of error.”

Being Wrong Book Overview

Right now, at this very moment, you’re wrong. What you’re wrong about could be anything, from something as trivial as where you left your keys to something life-defining, such as a deeply held belief. Nevertheless, it’s doubtful you can come up with a list of things you’re wrong about off the top of your head. After all, it’s belief in our ideas that allows us to function, regardless of whether we’re wrong or right. Kathryn Schulz’s book Being Wrong, published in 2010, argues that making mistakes is an essential part of human nature and that it shouldn’t necessarily be our goal to eliminate every bit of error from our lives.

Instead, Schulz writes that we should change how we view mistakes so that we can leverage our common human foibles to help us learn, explore the world around us, and maybe even laugh at ourselves once in a while.

Schulz is a Pulitzer Prize-winning journalist whose work has covered a range of topics including immigration, civil rights issues, travel, cuisine, literary criticism, and the threat of earthquakes on the western US coast. Her work has appeared in magazines, newspapers, and online publications, including The New Yorker, Rolling Stone, The Nation, The Santiago Times, and Grist. She published her memoir, Lost & Found, in 2022.

Why Be Wrong?

The idea that being wrong about something can be good goes against what most of us are brought up to believe. We’re told that making mistakes is “only human,” but we’re also taught that making mistakes is a sign that we’re stupid or have low moral standing. This is wrong, Schulz argues. She says that what’s important is that we accept and learn from our mistakes because usually our mistakes don’t hurt us as much as reacting to them poorly does. We’ll begin by looking at how we define right and wrong and why clinging to our beliefs is a valid survival trait that nevertheless robs us of a healthy approach to error, even though our mistakes can enrich our lives.

Schulz doesn’t define right and wrong in terms of “truth,” instead focusing on the experience of changing your mind from one idea to another. This is because the traditional definition of “being wrong” (that you believe something is true when it isn’t) implies that there’s an underlying “truth” that every belief can be judged against. While this may apply to some situations, such as misremembering where you left your keys, it doesn’t hold for all situations, such as matters of personal taste or opinion. In these instances, we act as if an opinion, such as whether pickles taste good or bad, can be wrong even though there’s no objective truth either way.

Right and Wrong as Survival Strategies

Right and wrong are both essential aspects of human cognition, says Schulz. Being “right,” which means assuming that our beliefs are true and acting on them accordingly, is a survival tool we inherited from our prehistoric ancestors. After all, if you’re in the savannah and hear a noise you believe to be a lion, it’s better to act on that belief without question than to wait and study the matter in depth, increasing your risk of turning into a snack. As a result, being right feels good, and evolution rewards that feeling. However, Schulz argues that our ability to be wrong is also a survival skill in that it lets us imagine a world different from the one we live in, one that, while technically “wrong,” helps us look past our limited perceptions to solve problems.

Since we like to cling to the feeling that we’re right, the conflicts we experience aren’t between “right” and “wrong,” but between opposing views of “right,” writes Schulz. Our society doesn’t even afford us a healthy common language for admitting to mistakes without associated shame. Instead, we prioritize being right above all else while happily pointing out the mistakes of others. When we’re forced to confront our errors, we’ll either shift the blame or disassociate ourselves from our wrongness with the classic line, “Mistakes were made.” This robs us of the lessons we might learn from opening ourselves to the chance that we’re wrong, thereby harming relationships between people, cultures, religions, and nations.

Being Wrong Is Useful

If feeling that we’re right is so ingrained in our thinking, how can we engage with being wrong in a way that doesn’t undermine everything we believe? Schulz holds up modern science as an example of how to view being wrong as a stepping stone toward truth. The scientific method of theory and experimentation embraces the possibility of error in a way that shines a light on how we can harness mistakes in other areas.

The way that science does this is by inverting the normal human mode of thinking. Whereas we, as individuals and institutions, begin from the premise that our ideas are true until proven otherwise, scientists deliberately assume that any new theory or discovery may be false until it’s been independently tested multiple times under a multitude of conditions. Schulz points out that, even when a new scientific idea has survived enough tests to be widely accepted, the testing and refinement of the theory never stops. The errors that do crop up along the way don’t derail scientific progress—instead, we see them as signposts toward the truth.

Being Wrong Is Fun

Besides being useful as a tool to advance human understanding, the fact that our ideas about the world can be wrong is something that brings joy to all of our lives. If you find this hard to believe, consider that the differences in how each of us views the world (a multitude of opinions that cannot all be “right”) are the genesis of humor, art, and the quirks that make each of us unique.

Some people believe the whole point of humor is to point out mistakes in a way that’s pleasant and easy to digest. At its basic level, such as classic “pratfall” humor, we laugh at other people’s mistakes, and—if we’re strong enough to take a joke—we laugh at errors of our own. Schulz points out that more complicated humor also revolves around harnessing wrongness—a comedian says something that creates an expectation, then shows that expectation to be wrong in a way that briefly confuses the listener until they “get the joke.” Not all humor works this way, but enough of it does that it’s one of comedy’s defining traits, as epitomized by the medieval “court jester” who was the only one allowed to make fun of the king.

What It’s Like to Be Wrong

It’s easier to consider the pros and cons of being wrong on an intellectual level than it is to truly experience being wrong. This is because of what’s known as “error blindness”—our inability to see our own mistakes in the moment. How we react to discovering we’re wrong depends on how much our thinking has to change and whether that change will be pleasant or unpleasant.

There are two distinct reactions you can have to finding out that you’re wrong about something. The first is revulsion: Immediately after making a mistake, such as calling a friend by someone else’s name, you might say, “I feel sick,” “I want to throw up,” or even “shoot me now,” all of which are expressions of pain and the desire to end it quickly. But Schulz says this isn’t our only mode of feeling. The second reaction is elation at a happy surprise, such as when you run into a friend you hadn’t expected to see for a while. Both types of error, pleasant and painful, define how we feel about being wrong, though we tend to feel the negative more often than the positive.

However, Schulz points out that the feelings we associate with being wrong always occur after the fact. In the instant you call your friend the wrong name or make an erroneous statement, you don’t feel as if you’re wrong at all, because in your mind you’re not. Therefore, the real-time experience of being wrong is no different from the experience of being right. What’s more, even when you discover that you’re wrong, you do so by replacing the wrong belief with a corrected belief. In other words, you don’t change from being wrong to being right; you change from feeling that you’re right in one way to feeling that you’re right in a different way. The pain of mistakes is the pain of having been wrong, not that of being wrong in the present.

Schulz suggests that people don’t change a belief until they have a new one to replace the old one. We rarely, if ever, get stuck in the transition because, as a rule, we don’t reconsider beliefs in isolation—we always need two or more to compare, which can sometimes make changing a belief take a while.

Certainty and Doubt

How tightly we cling to any given belief falls somewhere on the spectrum between doubt and certainty. Schulz argues that certainty is the default position for more of our beliefs than we realize, and while that’s necessary for us to get through the day, it carries inherent dangers that only the discomfort of doubt can counter.

To be certain about the basic rules of life is more than a comfort, it’s a necessity. Consider how many beliefs we take so much for granted that we don’t even think of them as beliefs. We’re sure that an object will fall if we drop it. We’re sure that the sun will come up in the morning. We’re sure we need food and water to survive. Schulz explains that our whole understanding of the world is built on a framework of beliefs so fundamental that it wouldn’t make sense to doubt any of them. How could you function for a moment if you doubted that gravity works or that you need air to breathe?

Schulz says that certainty gets us into trouble because many core beliefs are tightly wrapped up in our sense of identity—such as the belief that our tribe will protect us and therefore we owe it our allegiance in return. Anything that calls such a belief into question feels like a personal attack. We instinctively double down on being certain instead of giving in to the discomfort of doubt, so if someone suggests that our core beliefs are wrong, we stop listening and deny the other person’s views. We tell them they’re misinformed, or stupid, or worse. When the threat of doubting our core beliefs is existential, we may even decide the other person is evil and out to destroy all that’s good in the world.

When we’re confronted with someone else’s blind certainty, it’s easy to see where absolute faith in a belief can fall short, but it’s hard to recognize that same failing in ourselves. Sometimes we admire doubt in other people, such as when someone admits that they were wrong, though often we view doubt as a sign of weakness. The truth is that doubt provokes anxiety, which is why we find certainty so appealing. Schulz writes that people who wear their doubt on their sleeve, such as undecided voters or religious agnostics, make others uncomfortable not because they’re undecided, but because they display an openness to their own potential for error that undermines the sense of certainty that everyone else relies on.

Why You’re Wrong

The fact that human error is practically a given implies that there’s something fundamentally wrong with how human beings perceive the world. That would be true if the purpose of the brain were to perfectly analyze information from your senses, but it’s not. Instead, the brain is optimized to make judgments quickly from limited data and choose the best behavior to promote our survival. As a result, our minds are built on heuristics: mental shortcuts to efficient, best-guess thinking based on limited information, mental models, and the collective judgment of whatever groups we’re a part of. According to Schulz, these tools make our minds amazingly efficient, but they’re also the loopholes through which we make mistakes.

Perception and Reason

The most basic and natural mistake that we make is to trust the evidence of our senses without question. The senses are the mind’s only window on the world, but we underestimate the degree to which that window is clouded by how the brain filters data through an unconscious interpretive process, followed by instinctive reasoning that doesn’t rely on strict rules of logic.

Schulz explains that our conscious minds don’t receive the “raw data” from our senses. Instead, the information we see, hear, and feel is touched up and processed by our unconscious nervous system, such as the way the brain’s visual cortex fills in the gaps between the still frames of a movie. Our brains are designed to fill in the gaps in any sensory data we receive. Doing so gives us a more cohesive awareness of our surroundings, which therefore aids our survival in the wild—but it also opens the door to error, particularly when the brain’s “best guess” to fill those gaps turns out to be wrong.

Our misperceptions are amplified when we use them to make decisions, a process which also relies on “best guess” logic more than we’d like to admit, writes Schulz. Rather than using careful logic to make decisions and judgments, our brains default to inductive reasoning—determining what’s likely to be true based on past experience. This mental shorthand allows for quick decisions that tend to be mostly right most of the time, but inductive reasoning also leads us into various cognitive traps, such as making overly broad and biased generalizations while ignoring information that doesn’t jibe with our beliefs. As with our sensory errors, our imprecise reasoning is a natural side effect of the processes that make our brains so efficient.

Belief and Imagination

Our potentially faulty judgments based on erroneous sensory data form the shaky foundations on which we build beliefs, giving us even more chances to be wrong. Our beliefs shape everything we do, and they’re so interwoven and interconnected that admitting one of them is wrong can threaten our entire framework of understanding. Schulz discusses how beliefs are formed and how easily we invent them on the fly with little or no evidence to back them up.

Beliefs are stories we tell about the world. We’re conscious of some, such as beliefs about money, but unconscious of others, such as which way is “down.” We cling to some beliefs very tightly, while others change more easily, depending on how important they are. Schulz says that we automatically form beliefs about every new thing or idea we encounter, because otherwise we wouldn’t have a way to determine how to act or predict what will happen. This belief formation is a two-pronged approach—part of your mind creates a story to explain what your senses tell you, while another part of your mind checks your story against further input from your senses. Either side of this process can break down, resulting in beliefs that are wrong.

We’ve already mentioned how senses can fail, but the storytelling aspect of your brain goes wrong when you spin beliefs from sheer imagination. Schulz acknowledges that imagination is an evolutionary gift that lets us solve problems we’ve never faced before, but it goes wrong when we invent stories without any evidential grounding. We do this because our minds crave answers, so we feed them by making up theories. Admitting that you don’t know something is more uncomfortable than pretending that you do, hence the temptation to believe things too strongly.

Social Pressure to Believe

Being wrong doesn’t happen only on an individual level. We don’t form our beliefs on our own, and history has shown that large groups of people can all be wrong at once. Try as you might, there’s no way to avoid learning beliefs and behaviors from the people around you, and when you’re firmly embedded in a group, any fallacies in the thinking of that group are only reinforced by the strength of group identity.

“Think for yourself” is common advice, but unfortunately, you can’t do it. We all rely on other people’s knowledge—there’s too much in the world to learn on our own. The problem is telling whether or not someone else’s beliefs are worth sharing. Schulz argues that we generally don’t judge someone else’s beliefs on the merit of their ideas. Instead, we first decide if someone else is trustworthy—if they are, we accept their beliefs. This is a time-saving shortcut that lets us learn from teachers and parents, determine which news articles to read, and decide which opinion podcasts to listen to. However, this shortcut opens up a world of error because it multiplies our own faulty judgment by that of many others. Mistakes spread like a plague.

Schulz says that, in years past, we formed many of our beliefs based on the groups we were raised in, but, in the Information Age, we seek out and form groups based on shared beliefs. Group consensus is a powerful drug, and in a group based on common ideas, belief in those ideas is self-reinforcing, while any evidence against them is shut out by the group’s willful blindness.

When social status and group membership are defined by your agreement with certain beliefs, then any dissent is an attack on the group and can be punished by shunning, expulsion, or worse. Human beings are social animals, and it’s easier to go along with questionable ideas than lose your group status.

How to Cope With Being Wrong

We’ve all felt the pain of making small mistakes—acknowledging the big ones throws us into turmoil. If you find out you’ve been wrong on a major level, especially about a core belief that part of your worldview hinges upon, it can trigger a full-blown existential crisis. As with grief, there are stages you’ll inevitably go through, including gauging the scope of how wrong you’ve been, denying your error or perhaps defending it, before hopefully accepting that you were wrong and finding a way to grow in response.

Schulz says that, when you learn you’ve been wrong, your first question will be, “By how much?” The scale of your error determines how many of your beliefs you’ll have to change, and how truthfully you can answer that question depends on how well you can take the emotional punch of admitting your mistakes. Your initial response will also include a measure of denial as a defense mechanism. Short-term denial isn’t necessarily bad. It can give you enough emotional breathing room to face up to your mistake once the initial shock has passed. Long-term denial is a different story. Rather than leading to healthy growth, it’s rooted in deceit: lying to others and lying to yourself.

No two people react to being wrong in the same way, though Schulz writes that there are patterns. The storytelling part of your brain will generate theories about why you were wrong, and many of these will be defensive in nature. You may tell yourself that you were almost right, or that you were only wrong in certain ways, like arguing your company’s new product would have succeeded if only the timing of its release had been better. You might shift the blame for being wrong onto someone else, or you might claim that you’d been right to be wrong because you were erring on the side of safety. Whatever your approach, what you’re defending against is the pain of being wrong, not the fact of your mistake.

Accepting That You’re Wrong

Though Schulz often claims that not all mistakes are bad, responding to them poorly never ends well, and the results of denial can sometimes be disastrous. Whether it’s minor or major in scope, the healthiest way to deal with a mistake is to accept it and use it to grow. To be ready for this, you have to be open to the possibility that you can be wrong, acknowledge that your beliefs are always changing, and face up to the fact that the fight against being wrong is a never-ending struggle.

The upside of learning you’ve been wrong about something is that it opens you up to change and exploration. First, however, Schulz recommends that you cultivate an openness to the chance you could be wrong about a great many things. The manufacturing industry already models this by thinking through every possible way the systems they design might fail. On a personal level, you can start to practice this by using the language of equivocation—“maybe, possibly, I’m not sure”—instead of speaking and thinking from a place of certainty. Our culture paints uncertainty as weakness, but that’s just another way that our culture may be wrong. Uncertainty helps you stay open to error and cushions the blow of admitting to mistakes.

To be sure, if you learn that you’ve been wrong in a way that’s fundamental to your sense of self—something that might change your religious beliefs or whether you want to continue in your career—you’re going to go through a painful transition. Nevertheless, Schulz points out that our identities, based on our changeable beliefs, are always in flux. Change is a natural, if painful, part of life that we all experience in one way or another. It may be easier for such change to happen slowly, but there’s always a sense of loss associated with it. The trick is to change your attitude toward being wrong so that you can see it for the lessons that it brings instead of the pain it makes you feel.

The final problem with admitting you’re wrong and adopting a different set of beliefs is dealing with the chance that you might still be wrong. Schulz writes that correcting your mistakes is a never-ending process, and unless you stumble on an absolute truth, you’ll have to deal with being wrong for the rest of your life. So is there a point in even trying? Of course! Not only is there value in the path of self-improvement, even if it’s a journey with no end, but being open to your own capacity for error also teaches you to be compassionate toward other people and the unique mistakes they make. The more that people can accept their own errors, the more open they are to new beliefs and ideas, and the more they can see the world through someone else’s eyes.
